Why WebXR and Web Bluetooth make a great combo

It’s an exciting time for interacting with physical devices via the web — and also for the Immersive Web. The former just received a boost last week with Web Bluetooth support arriving in the stable Chrome browser on Windows. For the latter, last month saw the announcement of the formation of the W3C Immersive Web Working Group, to continue the work bringing high-performance Virtual Reality and Augmented Reality to the open Web.

There are also exciting opportunities for combining physical devices and the Immersive Web together. Not only are they both important examples of the larger trend we’re seeing around our physical and digital worlds merging, but they complement each other particularly well. This was the topic that Diego González and I spoke about at the recent GDG DevFest in Ukraine and Heapcon in Serbia.

Why combine IoT and XR?

The proliferation of connected devices and sensors is leading to a huge rise in the amount of data we’re collecting about the world around us. As it grows, understanding this data will become ever harder. While it’s possible to leave much of the data-crunching to machines, there are inherent dangers in trusting systems which are inscrutable to humans. It will be much better if we can find enhanced ways to visualise and understand the data ourselves. This is where VR and AR can step in. As “the grandfather of VR” Jaron Lanier has said:

“VR is amazing at conveying complexity with lucidity.”

And as neuroscientist Beau Cronin has described, there’s nothing more natural for us than a 3-Dimensional world, since we’ve all grown accustomed to inhabiting one since birth. By representing data as virtual worlds, we can unlock our full potential as data detectives, enabling us to better spot anomalies and trends. Augmented and Mixed Reality also have huge potential since they can enhance our real world with key data and controls, in the right place, at the right time.

Early examples include experiencing investment portfolios as digital cities and stock prices as virtual rollercoasters. Here at Samsung we have also experimented with an interactive dress and accompanying Virtual Reality experience visualising noise pollution, and a VR application for visualising and controlling a sensor-laden LEGO bridge.

Why the web?

Our regular readers will be familiar with our arguments for web technologies, but for those who might not have considered the web as a potential platform for physical and immersive technologies…

- There are a huge number of web developers out there. The latest Stack Overflow Developer Survey found that the top three most popular technologies are JavaScript, HTML and CSS. Empowering web developers to quickly dive into IoT and XR using familiar technologies can help to unlock a vast potential for innovation.

- The Web lets us dive right in, with as little friction as possible. Web applications are only a tap away — all we need is a URL. Using Progressive Enhancement we can cater for all types of devices, even accommodating the literal new dimension of XR.

Our mashup

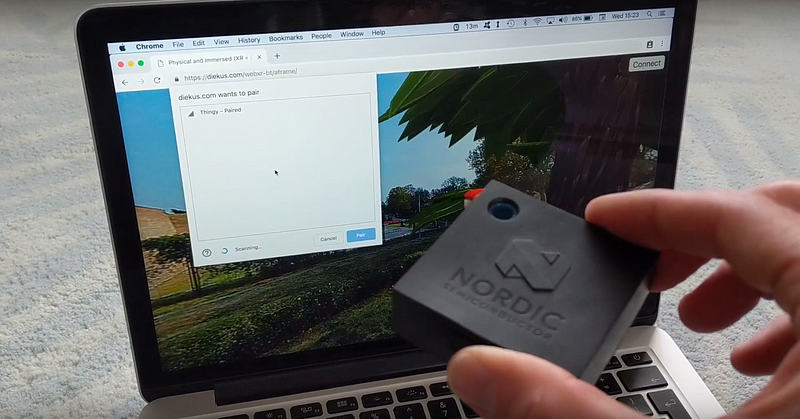

For our demonstration, Diego and I created a web-based VR experience which uses real-time sensor data from a Nordic Thingy, a small multi-sensor device accessible via Bluetooth Low Energy. In other words, we created a virtual world which adapts in real-time to our physical world.

Here’s how it looks (presented in a laptop browser, but the scene could be viewed in VR too):

The demo includes:

- Dynamic rain linked to the real-world humidity percentage that we obtain from the Thingy

- Background lighting which adapts to the values we receive from the light/colour sensor

- A parrot which nods along according to the Thingy’s orientation data

- And finally, as a fun bonus, pressing the button on the Thingy makes the parrot squawk!
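To give a feel for how sensor readings like these might drive a scene, here is a minimal sketch in TypeScript. It is not taken from our demo source: the function names, the maximum raindrop count and the assumed 16-bit range of the colour channels are all illustrative assumptions.

```typescript
// Hypothetical helpers: names, ranges and defaults are illustrative assumptions,
// not code from the demo itself.

/** Clamp a number into the inclusive range [min, max]. */
function clamp(value: number, min: number, max: number): number {
  return Math.min(max, Math.max(min, value));
}

/** Map relative humidity (0-100 %) to a number of raindrop particles. */
function humidityToRainCount(humidityPercent: number, maxDrops: number = 2000): number {
  const fraction = clamp(humidityPercent, 0, 100) / 100;
  return Math.round(fraction * maxDrops);
}

/**
 * Convert raw colour-sensor channels into a CSS rgb() string for the background.
 * maxRaw assumes 16-bit channel values; adjust it to match the real sensor.
 */
function sensorColorToCss(
  red: number,
  green: number,
  blue: number,
  maxRaw: number = 65535
): string {
  const to8Bit = (raw: number): number =>
    Math.round((clamp(raw, 0, maxRaw) / maxRaw) * 255);
  return `rgb(${to8Bit(red)}, ${to8Bit(green)}, ${to8Bit(blue)})`;
}
```

With helpers like these, each new reading simply becomes a new particle count or background colour, so the scene code never needs to know where the numbers came from.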

The demo is a website, built entirely around open web technologies and emerging standards. Using A-Frame allowed us to enable Virtual Reality by default, using WebVR. Using Thingy.js allowed us to connect to the Nordic device over Web Bluetooth and receive its data.
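For those curious about what a library like Thingy.js does underneath, here is a rough sketch of subscribing to humidity notifications with the standard Web Bluetooth calls. This is not the Thingy.js API or our demo code. The UUIDs below are our best understanding of the Thingy's configuration service, environment service and humidity characteristic, and the single-byte humidity format is also an assumption, so please check them against Nordic's documentation. The function names are hypothetical.

```typescript
// Assumed UUIDs for the Nordic Thingy; verify against Nordic's documentation.
const CONFIGURATION_SERVICE = "ef680100-9b35-4933-9b10-52ffa9740042";
const ENVIRONMENT_SERVICE = "ef680200-9b35-4933-9b10-52ffa9740042";
const HUMIDITY_CHARACTERISTIC = "ef680203-9b35-4933-9b10-52ffa9740042";

/** Read relative humidity, assumed to be a single unsigned byte in percent. */
function parseHumidity(value: DataView): number {
  return value.getUint8(0);
}

/**
 * Subscribe to humidity notifications (hypothetical helper).
 * `bluetooth` is expected to be `navigator.bluetooth`; it is typed as `any`
 * so the sketch compiles without extra Web Bluetooth type definitions.
 */
async function watchHumidity(
  bluetooth: any,
  onHumidity: (percent: number) => void
): Promise<void> {
  // Opens the browser's device chooser.
  const device = await bluetooth.requestDevice({
    filters: [{ services: [CONFIGURATION_SERVICE] }],
    optionalServices: [ENVIRONMENT_SERVICE],
  });
  const server = await device.gatt.connect();
  const service = await server.getPrimaryService(ENVIRONMENT_SERVICE);
  const characteristic = await service.getCharacteristic(HUMIDITY_CHARACTERISTIC);

  // Each notification delivers the latest reading as a DataView.
  characteristic.addEventListener("characteristicvaluechanged", (event: any) => {
    onHumidity(parseHumidity(event.target.value as DataView));
  });
  await characteristic.startNotifications();
}
```

Browsers only allow `requestDevice` in response to a user gesture, so in a page you would call something like `watchHumidity(navigator.bluetooth, updateRain)` from a button's click handler, where `updateRain` stands for whatever function updates your scene.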

Although we admit this is probably not the most practical example(!) we hope our demo might give you some ideas for combining physical devices and the Immersive Web together. We can start to think of new ways to visualise and enhance our real-world data — ways that are natural, absorbing and even fun!

Here are our slides from GDG DevFest and here are our slides from Heapcon. The source code for the demo is here. The talks were recorded and we’ll share the video links here when they are available. If you have any feedback or ideas or examples you’d like to share with us, please leave a comment! Thank you to Lars Knudsen and Kenneth Christiansen for their help with the Thingy 🙂

Peter O’Shaughnessy

Can I call myself a “Web veteran” yet? Mastodon: @peter@toot.cafe. Twitter: @poshaughnessy

Samsung Internet Developers

Writings from the Samsung Internet Developer Relations Team. For more info see our disclaimer: https://samsunginter.net/about-blog